Founding Fathers (and Mothers)

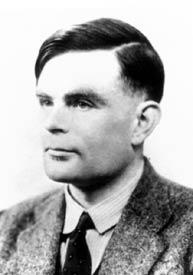

Alan Turing

(1912 - 1954)

Alan Turing is the father of modern computing. In 1937 he published a paper on a machine, later called the Turing machine. It described a machine that had an infinite length of tape and could move the tape backwards or forwards one "cell" at a time. The machine could do anything with the data on the tape: add numbers, perform statistical functions, organise recipes...

Later Turing realised that the tape itself could contain the instructions for what to do to the data, and the Universal Turing Machine (UTM) was born. What does this have to do with computing? You're using a UTM right now, reading this web page. All computers are incarnations of the UTM, albeit with finite memory. The interesting thing is that a UTM can perform any function that a computer can and vice versa. If a UTM cannot arrive at a solution for a problem, then there is no algorithmic solution. Similarly, any solution that a UTM can arrive at, so too can a computer, given enough memory.
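As a rough illustration of the tape-and-cell model described above, here is a minimal sketch in Python. The rule-table format, the state names, the `run_turing_machine` helper and the binary-increment example are all assumptions made for this sketch; they are not taken from Turing's paper or from the simulator mentioned on this page. A dictionary stands in for the infinite tape, so any cell that has never been written simply reads as blank.

```python
from collections import defaultdict

BLANK = "_"  # symbol read from any cell that has never been written


def run_turing_machine(rules, tape, state="start", halt_state="halt", max_steps=10_000):
    """Run a one-tape Turing machine and return the non-blank part of the tape.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (one cell left), +1 (one cell right) or 0 (stay put).
    """
    # A dictionary keyed by cell position stands in for the infinite tape.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    used = [pos for pos, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[pos] for pos in range(min(used), max(used) + 1))


# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),    # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),    # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),      # absorb the carry and stop
    ("carry", BLANK): ("1", 0, "halt"),    # number was all 1s: grow by one digit
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # binary 11 plus 1
```

Handing the same function a different rule table gives a different machine. The rules are just data, which is the intuition behind the universal machine.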

Alan Turing is also a hero to anyone trying to build a truly artificially intelligent computer, like HAL 9000. He proposed a test, called the Turing test funnily enough. If a person has a conversation with a computer and a human in a way in which he cannot "look" to see which is which (for example using IRC chat over the Internet), and that person cannot distinguish between man and machine, then the computer has passed the Turing test.

Check out my Turing Machine simulator.

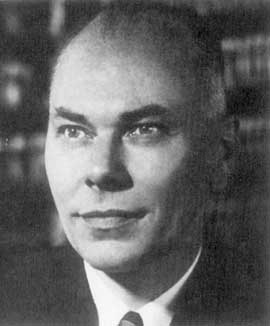

Alonzo Church

(14 June 1903 - 11 August 1995)

Alonzo Church was a student at Princeton, receiving his first degree in 1924, then his doctorate three years later. His doctoral work was supervised by Veblen, and he was awarded his doctorate for his dissertation entitled Alternatives to Zermelo's Assumption.

Church spent a year at Harvard University, then a year at Göttingen. He returned to the USA, becoming professor of mathematics at Princeton in 1929, a post he held until 1967, when he became professor of mathematics and philosophy at the University of California, Los Angeles.

His work is of major importance in mathematical logic, recursion theory and theoretical computer science. He created the lambda calculus in the 1930s, which today is an invaluable tool for computer scientists.

He is best remembered for Church's Theorem (1936), which shows there is no decision procedure for arithmetic. It appears in An unsolvable problem in elementary number theory published in the American Journal of Mathematics 58 (1936), 345-363. His work extended that of Gödel.

Church founded the Journal of Symbolic Logic in 1936 and remained an editor until 1979. He wrote the book Introduction to Mathematical Logic in 1956. He had 31 doctoral students including Turing, Kleene, Kemeny and Smullyan.

The Church-Turing thesis states: "Computers are capable of implementing any effectively described symbolic process." On this view, properly programmed digital computers are capable of achieving intelligence.

Turing's thesis: Anything that is computable can be computed by a Turing machine. There does not and cannot exist a machine that can compute things a Turing machine cannot compute.

Church's thesis: All the models of computation yet developed, and all those that may be developed in the future, are equivalent in power. We will not ever find a more powerful model.

These are often combined into the Church-Turing thesis, because they are interchangeable.

What does Church's thesis mean?

The class of computable functions is exactly the class of functions computable by a Turing machine. In particular: a Turing machine can do as much (or as little) as a real computer.

Howard Aiken

(1900 - 1973)

Howard Aiken worked on the Harvard Mark 1 (or IBM Automatic Sequence Controlled Calculator) computer at Harvard University. It was the largest and most complex electromechanical device ever constructed.

It was a relay machine, which is unusual considering that his doctoral thesis was on vacuum tubes. Relays are electromechanical, but vacuum tubes are electronic, and would therefore work faster. To most computer scientists the reason is shocking: money. Aiken tried to get two companies to build the machine: the Monroe Calculating Machine Company and RCA. Both declined and Aiken turned to IBM. In his own words:

"....if Monroe had decided to pay the bill, this thing would have been made out of mechanical parts. If RCA had been interested, it might have been electronic. And it was made out of tabulating machine parts because IBM was willing to pay the bill." [2]

Aiken was not wed to any particular technology, unlike some of his peers. As long as the machine was built, he didn't seem to care what it was made from.

The original plan was for IBM to build the machine at the university, but this proved impractical, so it was constructed at IBM's facility in Endicott, New York.

In April 1941 Aiken was called to active duty in the Naval Reserve while the machine was still under construction. Aiken and his supporters eventually persuaded the authorities that the computer was important to the war effort, and the operation of the machine became a naval project. He received orders to transfer to Cambridge to take charge of his computer. Aiken once remarked that he was the first officer in the history of the U.S. Navy to be put in command of a computer.

However, there was a falling-out between Aiken and IBM's president, Thomas J. Watson. A press release listed Aiken as THE inventor of the machine but did not list the IBM engineers who had solved the everyday problems of the machine. From Aiken's point of view, he was the inventor, in that he had come up with the architecture that the machine was based on. To smooth matters over, a later press release listed all the IBM engineers alongside Aiken.

John von Neumann

(28th December 1903 - 8th February 1957)

John von Neumann is most famous for his architecture model, which is still in use today. Von Neumann proposed that computers keep their processors separate from their memories. He explained how this is faster and more efficient. This is because the memory of a computer is usually the slowest part (excluding external storage, e.g. tape drive, hard disk). The processor could work much faster if it was not "slaved" to the memory.

He helped develop the Institute for Advanced Study (IAS) computer (pictured). It was the first stored program computer to adopt a parallel transmission scheme. As you can see, computer scientists aren't very creative with their acronyms. He knew of Turing's UTM ideas, but whether he applied them to the IAS is questionable. Very little remains of the old computers, least of all decent plans and circuit diagrams. Most computers were designed only in vague ways and the details were worked out during the construction. It is known that he visited the Harvard Mark I and found it impossibly slow.

Von Neumann was an indispensable member of the Manhattan Project.

For an in-depth biography of John von Neumann visit here.

Tommy Flowers

(1905-1998)

Who? You might well ask. Tommy Flowers was a GPO technician during the Second World War. He was sent to a top secret installation at Bletchley Park, called Station X. Station X had been given a laughably simple task: break the ENIGMA code.

He designed and built the machine known as COLOSSUS in 1943. It is arguably the first computer in the world. Colossus read a loop of paper tape and searched the enciphered messages (those of the German Lorenz teleprinter cipher rather than ENIGMA itself) for clues as to how to decipher them. It had five channels on the tape and the machine processed them all in parallel, using the tape itself for synchronisation. The machine ran at 5000 characters per second, or roughly 30 mph on the tape, whichever you prefer. This was very impressive considering that ordinary valves were being used. Flowers worked closely with Alan Turing on the project and realised that valves would work fine, as long as they weren't turned on and off all the time.

Give the same problem of code cracking, using the same program, to a top of the range Pentium PC, and the COLOSSUS would beat it with one hand tied behind its back.

The reason that no-one remembers him is that COLOSSUS and Station X were classified until 1974, by which time the Americans had been telling everyone that the first computer was the ENIAC for years. These days, nobody much cares. That is to say, the Americans lost the argument and want us to stop going on about it.

Dr Tommy Flowers was recently involved in the COLOSSUS rebuild project.

Grace Murray Hopper

(1906 - 1992)

Grace Hopper assisted Howard Aiken in building the Mark series of computers at Harvard University. They began with the Mark I in 1944. The Mark I was a giant roomful of noisy, clicking metal parts, 55 feet long and 8 feet high. The 5 ton device contained almost 760,000 separate pieces. It was used by the US Navy for gunnery and ballistic calculations, and kept in operation until 1959.

The following text is taken from the Grace Murray Hopper biography page. [3]

Pursuing her belief that computer programs could be written in English, Admiral Hopper moved forward with the development for UNIVAC of the B-0 compiler, later known as FLOW-MATIC. It was designed to translate a language that could be used for typical business tasks like automatic billing and payroll calculation. Using FLOW-MATIC, Admiral Hopper and her staff were able to make the UNIVAC I and II "understand" twenty statements in English. When she recommended that an entire programming language be developed using English words, however, she "was told very quickly that [she] couldn't do this because computers didn't understand English." It was three years before her idea was finally accepted; she published her first compiler paper in 1952.

Admiral Hopper actively participated in the first meetings to formulate specifications for a common business language. She was one of the two technical advisers to the resulting CODASYL Executive Committee, and several of her staff were members of the CODASYL Short Range Committee to define the basic COBOL language design. The design was greatly influenced by FLOW-MATIC. As one member of the Short Range Committee stated, "[FLOW-MATIC] was the only business-oriented programming language in use at the time COBOL development started... Without FLOW-MATIC we probably never would have had a COBOL." The first COBOL specifications appeared in 1959.

Admiral Hopper devoted much time to convincing business managers that English language compilers such as FLOW-MATIC and COBOL were feasible. She participated in a public demonstration by Sperry Corporation and RCA of COBOL compilers and the machine independence they provided. After her brief retirement from the Navy, Admiral Hopper led an effort to standardise COBOL and to persuade the entire Navy to use this high-level computer language. With her technical skills, she led her team to develop useful COBOL manuals and tools. With her speaking skills, she convinced managers that they should learn to use them.

Another major effort in Admiral Hopper's life was the standardisation of compilers. Under her direction, the Navy developed a set of programs and procedures for validating COBOL compilers. This concept of validation has had widespread impact on other programming languages and organisations; it eventually led to national and international standards and validation facilities for most programming languages.

Konrad Zuse

(1910-1995)

Konrad Zuse was a construction engineer for the Henschel Aircraft Company in Berlin, Germany, at the beginning of WW2. He built a series of automatic calculators, to assist him in his engineering work.

Zuse learned that one of the most difficult aspects of doing a large calculation, with either a slide rule or a mechanical adding machine, is keeping track of all intermediate results and using them, in their proper place, in later steps of the calculation. Zuse realised that an automatic-calculator device would require three basic elements: a control, a memory, and a calculator for the arithmetic. This is the von Neumann architecture, but Zuse came up with it several years before von Neumann published his paper on it.

Zuse was not acquainted with the technology used in modern mechanical calculators as he came from a civil engineering background. This deficit worked to his advantage because he had to rethink the whole problem of arithmetic computation and thus hit on new and original solutions.

The following list of achievements is taken from the Inventors Of The Modern Computer website. [4]

- A purely mechanical calculator/binary computer called the Z1 (1936), made for the express purpose of speeding up Zuse's lengthy engineering calculations. The Z1 was Zuse's test model; he used it to explore several groundbreaking technologies in calculator development: program control, the binary system of numbers, floating point arithmetic, a high-capacity memory, and modules or relays operating on the yes/no principle. Not all of Zuse's ideas were fully implemented in the Z1; Zuse succeeded more with each prototype, but he had his ideas early.

- In 1939, Zuse completed the Z2, the first fully functioning electromechanical computer, which put his design for relay-type operations into practice.

- The Z3 (completed December 5, 1941, and made from recycled materials donated by fellow university staff and students) was the world's first fully programmable computer. The Z3 was based on relays and was very sophisticated for its time; it utilised the binary number system and could handle floating-point arithmetic. Paper was in short supply in Germany during the war, so instead of using paper tape or punched cards, Zuse used old movie film to store his programs and data with the Z3.

- The first algorithmic programming language, called "Plankalkül", was developed by Konrad Zuse in 1946, and he used it to write a chess playing program. The "Plankalkül" included arrays and records, and used a style of assignment (storing the value of an expression in a variable) in which the new value appears on the right. An array is a collection of identically typed data items, distinguished by their indices (or "subscripts"), for example written something like A[i,j,k], where A is the array name and i, j and k are the indices. Arrays are appropriate for storing data which must be accessed in an unpredictable order, in contrast to lists, which are best when accessed sequentially.

- The Z4 (finished in 1949) escaped being destroyed, unlike the Z1 through Z3, by being smuggled from Germany in a horse drawn cart and hidden in stables en route to Zurich, Switzerland, where Zuse completed and installed the Z4 in the Applied Mathematics Division of Zurich's Federal Polytechnical Institute, where it was used until 1955. It had a mechanical memory with a capacity of 1,024 words, several card readers (Zuse no longer had to use movie film) and punches, and various facilities to enable flexible programming (address translation, conditional branching).
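The difference between indexed arrays and sequentially accessed lists, mentioned in the Plankalkül entry above, can be sketched as follows. This is Python rather than Plankalkül, and the `Array3D`, `Node` and `nth` names are invented for the illustration. It only aims to show why an element such as A[i,j,k] can be reached directly, while the n-th item of a linked list has to be walked to.

```python
class Array3D:
    """Fixed-size 3-D array stored in one flat list (row-major order)."""

    def __init__(self, ni, nj, nk, fill=0):
        self.shape = (ni, nj, nk)
        self._data = [fill] * (ni * nj * nk)

    def _offset(self, i, j, k):
        ni, nj, nk = self.shape
        if not (0 <= i < ni and 0 <= j < nj and 0 <= k < nk):
            raise IndexError("index out of range")
        # The position is computed from the indices, so no searching is needed.
        return (i * nj + j) * nk + k

    def __getitem__(self, index):
        return self._data[self._offset(*index)]

    def __setitem__(self, index, value):
        self._data[self._offset(*index)] = value


class Node:
    """One cell of a singly linked list."""

    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


def nth(head, n):
    """Return the n-th value of a linked list by following n links in turn."""
    node = head
    for _ in range(n):
        node = node.next
    return node.value


# Array: any element can be read or written in any order.
A = Array3D(2, 3, 4)
A[1, 2, 3] = 42

# Linked list holding 10, 20, 30: reaching the last item means passing the others.
head = None
for value in reversed([10, 20, 30]):
    head = Node(value, head)

print(A[1, 2, 3], nth(head, 2))
```

The array computes where an element lives from its indices, so access order does not matter. The list has no such shortcut, which is why it suits data that is read from start to finish.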

Bob Noyce

(1927 - 1990)

Bob Noyce is credited with creating Silicon Valley according to the author of Accidental Empires, Robert X. Cringely. Most of the information here is taken from that book, which was broadcast as "The Triumph of the Nerds" by Channel 4.

William Shockley had invented the transistor at Bell Labs in the late 1940s and by the 1950s was on his own building transistors in California. Shockley was a good scientist, but a bad manager.

"He posted a list of salaries on the bulletin board, pissing off those who were being paid less for the same work. When work wasn't going well, he blamed sabotage and demanded lie detector tests" [5]

Bob Noyce and seven other engineers quit en masse from Shockley Semiconductor. They started Fairchild Semiconductor, the archetype for every Silicon Valley start-up that has followed. More than fifty semiconductor companies eventually split off from Fairchild in the same way that Fairchild had split from Shockley. That is to say, they found venture capital and started a new company.

Noyce also started another company, Intel. Intel was started because Noyce couldn't get Fairchild's eastern owners to accept that stock options should be a part of compensation for all employees, not just for managers. Noyce wanted to spread the wealth around all employees. This management style still sets the standard for every computer, software, and semiconductor company in the Valley today.

"...every CEO still wants to think that the place is being run the way Bob Noyce would have run it." [5]

But the reason that Noyce invented the integrated circuit was not to advance research or progress computers.

"...'I was lazy,' he said. 'It didn't make sense to have people soldering together these individual components when they could be built as a single part.'..." [5]

The PBS website says that Noyce introduced three revolutions to the semiconductor industry and hence the computer industry.

"As it was, Shockley and Noyce's scientific vision -- and egos -- clashed. When seven of the young researchers at Shockley Semiconductor got together to consider leaving the company, they realised they needed a leader. All seven thought Noyce, aged 29 but full of confidence, was the natural choice. So Noyce became the eighth in the group that left Shockley in 1957 and founded Fairchild Semiconductor. Noyce was the general manager of the company and while there invented the integrated chip -- a chip of silicon with many transistors all etched into it at once. That was the first time he revolutionised the semiconductor industry. He stayed with Fairchild until 1968, when he left with Gordon Moore to found Intel. At Intel he oversaw Ted Hoff's invention of the microprocessor -- that was his second revolution.

At both companies, Noyce introduced a very casual working atmosphere, the kind of atmosphere that has become a cultural stereotype of how California companies work. But along with that open atmosphere came responsibility. Noyce learned from Shockley's mistakes and he gave his young, bright employees phenomenal room to accomplish what they wished, in many ways defining the Silicon Valley working style was his third revolution." [6]

Gordon Moore

(3rd January 1929 - )

In 1965, Gordon Moore was preparing a speech and made a memorable observation. When he started to graph data about the growth in memory chip performance, he realised there was a striking trend. Each new chip contained roughly twice as much capacity as its predecessor, and each chip was released within 18-24 months of the previous chip. If this trend continued, he reasoned, computing power would rise exponentially over relatively brief periods of time.

Moore's observation, now known as Moore's Law, described a trend that has continued and is still remarkably accurate. It is the basis for many planners' performance forecasts. In 26 years the number of transistors on a chip has increased more than 3,200 times, from 2,300 on the 4004 in 1971 to 7.5 million on the Pentium II processor.

Taken from the Intel website.

Moore co-founded Intel in 1968, serving initially as Executive Vice President. He became President and Chief Executive Officer in 1975 and held that post until elected chairman and Chief Executive Officer in 1979. He remained CEO until 1987 and was named Chairman Emeritus in 1997.

Moore is widely known for "Moore's Law," in which he predicted that the number of transistors that the industry would be able to place on a computer chip would double every year. In 1975, he updated his prediction to once every two years. While originally intended as a rule of thumb in 1965, it has become the guiding principle for the industry to deliver ever-more-powerful semiconductor chips at proportionate decreases in cost. [7]

Also taken from the Intel website.
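The figures quoted above can be checked with a few lines of arithmetic. The sketch below, in Python, uses only the numbers given in the text (2,300 transistors in 1971 and 7.5 million 26 years later). The function names are made up for this illustration, and the calculation assumes smooth exponential growth, which real chip releases only approximate.

```python
import math


def growth_factor(start_count, end_count):
    """How many times larger the end count is than the start count."""
    return end_count / start_count


def doubling_time_years(start_count, end_count, years):
    """Doubling period implied by growing from start_count to end_count in `years`."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


def projected_count(start_count, years, doubling_period_years):
    """Count after `years` if it doubles once every doubling_period_years."""
    return start_count * 2 ** (years / doubling_period_years)


factor = growth_factor(2_300, 7_500_000)
period = doubling_time_years(2_300, 7_500_000, 26)
two_year_rule = projected_count(2_300, 26, 2)

print(f"growth factor: about {factor:,.0f} times")
print(f"implied doubling time: about {period:.2f} years")
print(f"strict two-year doubling would give: about {two_year_rule:,.0f} transistors")
```

With these inputs the growth factor comes out a little above 3,200 and the implied doubling time a little over two years, so the quoted figures are consistent with a roughly two-year doubling period.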

Moore's law may not hold for much longer though. Transistors in silicon chips are CMOS (Complementary Metal Oxide Semiconductor), largely because these give out less heat than other available technologies. However, CMOS will reach its limit, in terms of how many components can be put on a single chip, in about 10 years or so. Most technology gurus are estimating 2010 for the year when CMOS reaches its limits.

Gordon Moore has been advocating the need for another technology to replace CMOS for years, and now it may be too late. This is because it normally takes about 10 - 15 years to put new technologies into commercial production. The industry is rapidly running out of time and no-one seems to be rushing around trying to fix the problem. In all probability, it looks like the Y2K problem all over again. Companies will rush to fix the problem only when it has cleared the horizon and is trundling inevitably towards them. [8]

Gordon Moore, like everyone at Intel, doesn't have an office. He works from a cubicle.

Frederic Calland Williams (1911 - 1977) and Tom Kilburn (1921 - 2001)

The reader may be wondering why these two don't have individual entries. The reason is that for most of their time, they worked together on projects including the Manchester Baby.

The Williams-Kilburn Tube and the Baby (1947-48)

In December 1946 Freddie Williams moved to the University of Manchester to take up a chair in the Department of Electro-technics, later the Department of Electrical Engineering. Tom Kilburn was seconded to Manchester by TRE (the Telecommunications Research Establishment) at the same time so that they could continue to work on the digital storage of information on a Cathode Ray Tube (CRT). It was known worldwide that an effective electronic storage mechanism was essential to the progress of electronic digital computers, and Freddie Williams thought they could solve the problem using CRTs.

In late 1946 Freddie Williams had succeeded in storing one bit on a CRT. Tom Kilburn spent the first few weeks of 1947 transferring the whole of the equipment for the experiment up to Manchester. He was helped in this by a second person seconded from TRE, Arthur Marsh, who soon left, and was replaced after a few months by Geoff Tootill, who was Tom Kilburn's full-time assistant for the next two years. By March Tom Kilburn had suggested an improved method of storing bits, and by the end of 1947 they were able to store 2048 bits on a CRT, having explored various techniques. They were now ready to build a small computer round this storage device, subsequently called the Williams Tube, though Williams-Kilburn Tube would have been more accurate. In order to justify a second year of secondment, Tom Kilburn submitted a report to the TRE management. This report, A Storage System for use with Binary Digital Computing Machines (Progress report issued 1st December 1947), was circulated widely and was influential in several American and Russian organisations adopting the Manchester-developed CRT storage system.

Tom Kilburn was already familiar with electronic computing using analogue devices from his experience with radar. He first heard about digital computing machines in 1945, when mercury-filled delay lines were being designed as serial storage devices. In early 1947 he attended a series of lectures at the National Physical Laboratory (NPL) given by Alan Turing and attended by some 20 people. Turing had completed the design of NPL's computer by then, which was based on mercury acoustic delay lines as the storage device, and the lectures were about the specific details of his design. If Kilburn was influenced by the lectures, it was mainly indirectly, as he resolved not to build a computing machine "like that".

On into 1948 Tom Kilburn led the work on designing and building a Small Scale Experimental Machine, "The Baby". This tested in practice the ability of the Williams-Kilburn Tube to read and reset at speed random bits of information, while preserving a bit's value indefinitely between resettings. And for the first time in the world a computer was built that could hold any (small!) user program in electronic storage and process it at electronic speeds. He wrote the first program for it, which first worked on June 21st 1948.

In late 1948 Tom Kilburn joined the staff of the Electrical Engineering department and was awarded a Ph.D. for his work on the Williams-Kilburn Tube and the Baby.

The Manchester and Ferranti Mark 1s (1948-51)

The next step was to build a computer around one or more Williams-Kilburn Tubes, both so that the speed and reliability of the Tube could be tested, and so that (if it proved satisfactory) the aim of the world's computer researchers for a computer with an effective electronic storage for both program and data could be realised. So with Kilburn as the main driving force behind the computer design, the Small-Scale Experimental Machine, the "Baby", was designed and built in 1947 and 1948, proving the effectiveness of both the Williams-Kilburn Tube design and the stored-program computer. It ran its first program in June 1948.

By the autumn of 1948 an expanded team had been set up under Freddie Williams to design and build a usable computer, the Manchester Mark 1, and the government had awarded a contract to Ferranti Ltd. to manufacture a commercial computer based on it. The Manchester Mark 1 was fully operational by around October 1949, and included many new features, including a magnetic drum backing store. By this time the detailed design was already being handed over to Ferranti, to produce a machine with a number of enhancements and improved engineering, the Ferranti Mark 1. The first machine came off the production line early in 1951, the world's first commercially available computer.

Freddie Williams and his team repeated the achievement of 1947, of devising and building the first working Random Access Memory in a computer, by designing and building the first working high-speed magnetic backing store attached to a computer, the Manchester Mark 1 drum. Drum stores were also being worked on elsewhere, in particular by A.D. Booth of Birkbeck College, London, who provided useful input. The gathering of the raw material for the first drum showed Freddie Williams in typical practical free-thinking form. As Tommy Thomas recalls: the drum itself was scrounged from discarded equipment from another department, a motor attached in the department, and the magnetic surface applied by the motorcycle electroplating shop across the road from his office! Freddie Williams took a particular interest in the development of the drum, carrying out with J.C. (Cliff) West the early experimentation on servo-mechanisms to synchronise the drum's rotation with the refresh cycle of the CRT stores.